AI lawsuit investigation: Did AI misinformation cause physical, emotional or psychological harm? – Class Action Lawsuits

Report on the Accountability of Artificial Intelligence Platforms and Alignment with Sustainable Development Goals

The proliferation of Artificial Intelligence (AI) platforms, including ChatGPT, Gemini, Grok, and Copilot, presents significant challenges to global development objectives. An investigation is underway to assess the accountability of AI companies for instances where misinformation generated by their platforms has resulted in physical injury, psychological trauma, or death. This report examines the implications of AI-generated harm in the context of the United Nations Sustainable Development Goals (SDGs).

The Impact of AI-Generated Misinformation on Public Health and Well-being (SDG 3)

The dissemination of inaccurate information by AI systems poses a direct threat to SDG 3, which aims to ensure healthy lives and promote well-being for all. When users rely on AI for critical guidance, the consequences of misinformation can be severe, undermining public health outcomes.

Categories of Harm

- Physical Injury: Occurs when individuals follow inaccurate AI-generated advice related to health, medication, or personal safety.

- Emotional and Psychological Harm: Includes distress, anxiety, or reputational damage resulting from AI-generated defamation, harassment, or impersonation.

- Severe Trauma and Self-Harm: Arises from emotional distress triggered by harmful AI content, such as deepfakes or manipulative messaging, which can lead to trauma or self-harm.

Pursuing Justice and Corporate Accountability in the Digital Age (SDG 16)

Holding technology companies accountable for the products they develop is a critical component of SDG 16, which focuses on promoting peace, justice, and strong institutions. Legal actions against AI companies seek to establish frameworks for corporate responsibility and provide access to justice for those harmed.

The Role of Legal Frameworks

Legal investigations into AI-related harm are a mechanism for upholding the rule of law and ensuring equal access to justice, as outlined in SDG target 16.3. These actions challenge the adequacy of standard user disclaimers and aim to establish a precedent for accountability when AI outputs lead to demonstrable harm.

Establishing Corporate Responsibility

The investigation seeks to hold corporations accountable for the information their technology produces. This aligns with the objective of SDG 16 to build effective and accountable institutions, extending this principle to private sector entities that wield significant influence over public information and safety.

Fostering Responsible Innovation for Sustainable Development (SDG 9)

While AI is a powerful driver of innovation, its development must be managed responsibly to align with SDG 9, which calls for building resilient infrastructure and promoting inclusive and sustainable industrialization. The risks associated with AI misinformation highlight the need for ethical guardrails in technological advancement.

The Challenge of Unregulated Innovation

AI platforms generate responses based on data patterns rather than verified facts, creating significant risks when used for critical decisions in medical, financial, or legal matters. Ensuring that innovation serves humanity requires governance structures that mitigate such risks and prioritize user safety over unchecked technological deployment.

Criteria for Legal Investigation

An individual may have grounds for a claim if their case meets certain criteria. The investigation is examining incidents where:

- An individual relied on an AI platform such as ChatGPT, Copilot, Gemini, or Grok.

- The individual suffered documented physical or emotional harm, including self-harm or attempted suicide, within 60 days of the interaction.

- A clear connection can be established between the AI-generated misinformation and the subsequent harm, supported by evidence like chat logs and medical records.

Analysis of Sustainable Development Goals in the Article

1. Which SDGs are addressed or connected to the issues highlighted in the article?

The article highlights issues that are directly connected to the following Sustainable Development Goals (SDGs):

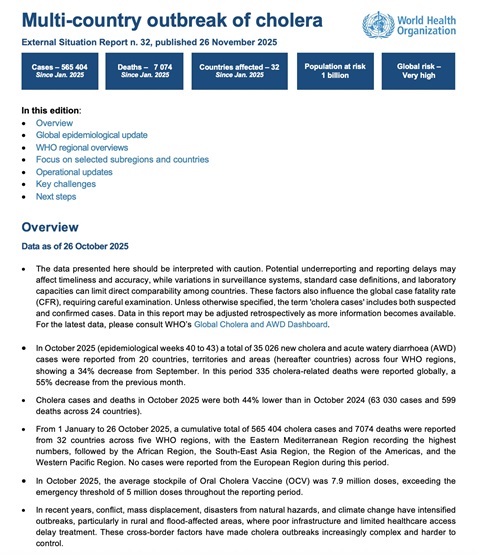

- SDG 3: Good Health and Well-being: The core issue discussed is the “physical or emotional harm,” “injury, trauma or death” caused by relying on AI-generated misinformation. The article explicitly mentions “medical treatment, emotional trauma,” “physical injury,” “emotional or psychological harm,” “distress, anxiety,” and “self-harm or trauma,” all of which fall under the purview of ensuring healthy lives and promoting well-being.

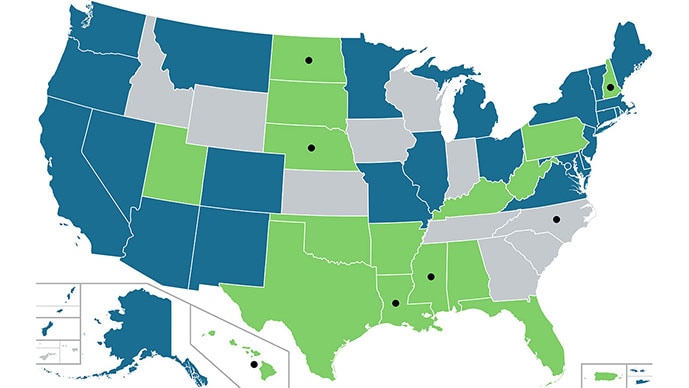

- SDG 16: Peace, Justice and Strong Institutions: The article is a call to action for a lawsuit to “hold AI companies accountable.” This directly relates to promoting the rule of law, ensuring access to justice for all, and building effective, accountable institutions. The lawsuit investigation is a mechanism for seeking justice and redress for the harm caused.

2. What specific targets under those SDGs can be identified based on the article’s content?

Based on the article’s content, the following specific SDG targets can be identified:

- Target 3.4: By 2030, reduce by one third premature mortality from non-communicable diseases through prevention and treatment and promote mental health and well-being.

  - Explanation: The article’s focus on “emotional or psychological harm,” “distress, anxiety,” “self-harm or trauma,” and “attempted suicide” directly addresses the promotion of mental health and well-being. The harm caused by AI misinformation is presented as a direct threat to individuals’ mental and physical health.

- Target 16.3: Promote the rule of law at the national and international levels and ensure equal access to justice for all.

  - Explanation: The entire article is framed around an “AI lawsuit investigation” that allows individuals who have suffered harm to “pursue compensation” and “hold companies accountable.” This initiative is a clear example of providing a legal pathway for victims to seek justice.

- Target 16.10: Ensure public access to information and protect fundamental freedoms, in accordance with national legislation and international agreements.

  - Explanation: The article addresses the negative consequences of unreliable information. It states that AI can provide information that is “inaccurate or misleading” and “outright incorrect.” The lawsuit seeks redress for harm caused by such misinformation, thereby highlighting the need for accountability when new technologies disseminate information to the public.

3. Are there any indicators mentioned or implied in the article that can be used to measure progress towards the identified targets?

Yes, the article mentions or implies several indicators that can be used to measure progress:

- For Target 3.4:

  - The article explicitly lists types of harm that can be tracked as indicators of negative impacts on well-being: “Physical injury,” “Emotional or psychological harm,” “Distress, anxiety,” “reputational damage,” “Self-harm or trauma,” and “attempted suicide.” The mention of “attempted suicide” relates most closely to indicator 3.4.2 (suicide mortality rate). The number of individuals seeking “medical treatment” or experiencing “emotional trauma” due to AI misinformation can serve as a direct measure.

- For Target 16.3:

  - The primary indicator is the ability of affected individuals to seek legal recourse. The article’s call to “join the artificial intelligence lawsuit” and the number of people who “qualify to join” and “pursue compensation” serve as a direct indicator of access to justice for this specific issue.

- For Target 16.10:

  - An implied indicator is the number of documented cases of harm resulting from AI misinformation. The article suggests that these incidents can be measured and verified through documentation such as “chat logs, medical reports or treatment records,” which provides a tangible way to track the prevalence and impact of harmful, inaccurate information.

4. SDGs, Targets, and Indicators Table

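| SDGs | Targets | Indicators (as identified in the article) |
|---|---|---|
| SDG 3: Good Health and Well-being | Target 3.4: Promote mental health and well-being. | Reported cases of physical injury, emotional or psychological harm, self-harm, or attempted suicide linked to AI misinformation; number of individuals seeking medical treatment as a result (relates to indicator 3.4.2, suicide mortality rate). |
| SDG 16: Peace, Justice and Strong Institutions | Target 16.3: Ensure equal access to justice for all. | Number of affected individuals who qualify to join the lawsuit and pursue compensation. |
| SDG 16: Peace, Justice and Strong Institutions | Target 16.10: Ensure public access to information and protect fundamental freedoms. | Number of documented cases of harm from AI misinformation, verified through chat logs, medical reports, or treatment records. |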

Source: topclassactions.com
