Governing Health – Compensation Considerations for Health System Innovation Activities [Podcast] – The National Law Review


Report on the State of Artificial Intelligence in U.S. Healthcare: 2025

Introduction: Aligning Technological Advancement with Sustainable Development Goals

The integration of artificial intelligence (AI) into the United States healthcare sector is advancing at an unprecedented rate, presenting both transformative opportunities and significant challenges. This evolution directly affects progress toward the United Nations Sustainable Development Goals (SDGs), particularly SDG 3 (Good Health and Well-being), SDG 9 (Industry, Innovation, and Infrastructure), and SDG 10 (Reduced Inequalities). As of 2025, the convergence of AI innovation with regulatory, market, and ethical considerations calls for a strategic approach to ensure that technology enables sustainable and equitable health outcomes for all.

Regulatory Frameworks and Institutional Governance (SDG 16)

The development of effective, accountable, and transparent institutions, a core target of SDG 16 (Peace, Justice, and Strong Institutions), is central to governing healthcare AI. The current regulatory environment, however, is fragmented and struggles to balance patient safety with rapid innovation.

Federal Oversight and Adaptation

The U.S. Food and Drug Administration (FDA) authorized nearly 800 AI- and machine learning (ML)-enabled medical devices for marketing in the five-year period ending September 2024. This proliferation challenges traditional regulatory models designed for static devices. In response, the FDA is adapting its framework to accommodate dynamic algorithms that can change over time.

- Predetermined Change Control Plans (PCCPs): These plans allow manufacturers to modify AI systems within predefined parameters without requiring new premarket submissions, fostering controlled innovation.

- AI/ML-based Software as a Medical Device (SaMD) Action Plan (January 2021): This plan outlines a total product life cycle approach built around a tailored regulatory framework, good machine learning practice (GMLP), a patient-centered approach, methods for identifying and mitigating algorithmic bias, and real-world performance monitoring.

- Regulatory Uncertainty: Shifting federal priorities, exemplified by the rescission and replacement of executive orders on AI, have created an uncertain landscape for long-term governance, although agency-level actions, such as the Affordable Care Act (ACA) Section 1557 final rule, continue to influence policy.
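The PCCP mechanism can be illustrated with a minimal, hypothetical Python sketch: a plan fixes in advance which kinds of changes are permitted and what performance an updated model must still meet, and each proposed update is checked against those bounds. The names `ChangeControlPlan`, `ProposedUpdate`, and `within_plan`, the change categories, and the sensitivity and specificity thresholds are all illustrative assumptions, not FDA requirements; actual plans and their acceptance criteria are set out in the manufacturer's own submission.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ChangeControlPlan:
    """Hypothetical bounds a manufacturer pre-specifies (illustrative only)."""
    allowed_change_types: frozenset[str]
    min_sensitivity: float
    min_specificity: float


@dataclass(frozen=True)
class ProposedUpdate:
    """Hypothetical description of a model change and its validation results."""
    change_type: str
    sensitivity: float
    specificity: float


def within_plan(plan: ChangeControlPlan, update: ProposedUpdate) -> bool:
    """Return True only if the update stays inside every pre-specified bound."""
    return (
        update.change_type in plan.allowed_change_types
        and update.sensitivity >= plan.min_sensitivity
        and update.specificity >= plan.min_specificity
    )


# Assumed example plan: two permitted change types and two performance floors.
plan = ChangeControlPlan(
    allowed_change_types=frozenset({"retrain_same_architecture", "add_training_data"}),
    min_sensitivity=0.90,
    min_specificity=0.85,
)

retrain = ProposedUpdate("add_training_data", sensitivity=0.92, specificity=0.88)
new_use = ProposedUpdate("new_intended_use", sensitivity=0.95, specificity=0.90)

print(within_plan(plan, retrain))  # True
print(within_plan(plan, new_use))  # False
```

Requiring every bound to hold is a deliberate simplification: in this sketch, a change type the plan does not list fails the check regardless of its metrics, reflecting the idea that only changes within predefined parameters avoid a new premarket submission.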

State-Level Legislative Patchwork

In the absence of comprehensive federal legislation, states are creating their own regulatory frameworks: during the 2024 session, 45 states introduced AI-related bills. This patchwork of state-specific requirements, such as California’s Assembly Bill 3030 on the use of generative AI in patient communications, complicates compliance for developers and providers operating across jurisdictions and may hinder the scalable deployment of innovations needed to achieve SDG 9.

Advancing Health Equity and Reducing Inequalities (SDG 3 & SDG 10)

A primary challenge for healthcare AI is its potential to either reduce or exacerbate health disparities, directly impacting SDG 10 (Reduced Inequalities) and the universal health coverage ambitions of SDG 3.

The Challenge of Algorithmic Bias

The performance of AI systems is contingent on the quality and representativeness of training data. Significant data gaps threaten to perpetuate systemic biases.

- A 2024 review of 692 FDA-approved AI/ML devices revealed critical demographic shortfalls:

  - Only 3.6% reported race and ethnicity data.

  - 99.1% provided no socioeconomic information.

  - 81.6% failed to report the ages of study subjects.

AI models trained on such nonrepresentative datasets risk delivering inequitable outcomes for vulnerable populations, including racial and ethnic minorities, the elderly, and individuals with disabilities. While regulations like the ACA Section 1557 final rule mandate nondiscrimination, enforcement remains uncertain amid shifting political priorities. This underscores the urgent need for standardized methodologies for bias detection and mitigation to ensure AI contributes positively to health equity.
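To give a sense of scale, the review's rounded percentages can be converted into approximate device counts out of the 692 reviewed. The Python calculation below does only that arithmetic; because the published percentages are rounded, the resulting counts are approximations, not figures reported by the review itself.

```python
# Approximate device counts implied by the 2024 review's rounded percentages.
TOTAL_DEVICES = 692

SHARES = {
    "reported race and ethnicity data": 0.036,
    "provided no socioeconomic information": 0.991,
    "did not report study subject ages": 0.816,
}


def approximate_counts(total: int, shares: dict[str, float]) -> dict[str, int]:
    """Convert rounded percentage shares into approximate whole-device counts."""
    return {label: round(total * share) for label, share in shares.items()}


for label, count in approximate_counts(TOTAL_DEVICES, SHARES).items():
    print(f"~{count} of {TOTAL_DEVICES} devices {label}")
```

By this arithmetic, roughly 25 devices reported race and ethnicity data, and only about six (692 minus roughly 686) provided any socioeconomic information.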

Data Privacy and Security: The HIPAA Challenge

The Health Insurance Portability and Accountability Act (HIPAA), enacted in 1996, predates modern AI, which creates compliance challenges when it is applied to AI tools. The use of AI for clinical documentation and decision support requires access to extensive patient data, raising privacy concerns. Building patient trust through robust data governance is essential for the strong institutions envisioned in SDG 16.

- Risk Management: Proposed updates to the HIPAA Security Rule from the Department of Health and Human Services (HHS) would require covered entities to include AI tools in their risk analysis and would mandate regular vulnerability scanning and penetration testing.

- Business Associate Agreements (BAAs): Contracts with AI vendors should address AI-specific risks, including algorithm updates, data retention policies, and security for ML processes, to safeguard protected health information (PHI).

Innovation, Infrastructure, and Economic Impact (SDG 9 & SDG 8)

The development and deployment of healthcare AI are key drivers of SDG 9 (Industry, Innovation, and Infrastructure) and contribute to SDG 8 (Decent Work and Economic Growth).

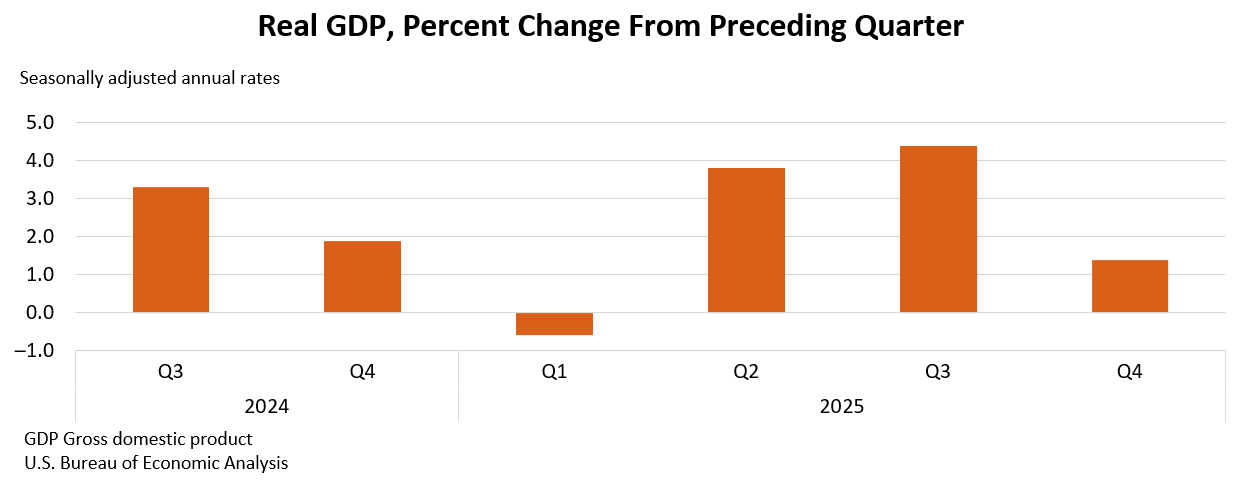

Market Dynamics and Investment Trends

Despite regulatory uncertainty, venture capital investment in healthcare AI remains strong, albeit more selective. Investors are focusing on solutions with clear clinical value and a viable path to regulatory compliance. An early 2025 survey indicated that 81% of digital health leaders hold a positive outlook for investment. Key areas of investment include:

- Clinical workflow optimization

- Value-based care enablement

- Revenue cycle management

Major technology corporations, large health systems, and pharmaceutical companies are also making substantial investments, integrating AI to accelerate drug development, streamline operations, and pilot emerging technologies, thereby building the resilient healthcare infrastructure central to SDG 9.

Emerging Technologies and Integration

The rise of generative AI presents new regulatory hurdles, as its ability to create synthetic content requires novel validation approaches. The integration of AI with other technologies like robotics and the Internet of Medical Things (IoMT) adds further complexity but also offers significant potential for remote monitoring and chronic disease management, advancing the accessibility goals of SDG 3.

Professional Liability and Human-Centric Implementation

Ensuring that AI is implemented responsibly requires clear standards for professional liability, robust cybersecurity, and a commitment to human oversight, reinforcing the principles of accountability within SDG 16.

Liability and Standards of Care

AI’s role in clinical decision-making complicates traditional medical malpractice frameworks, because determining causation and breach of duty when an algorithm is involved presents a novel legal challenge. Recommendations from the Federation of State Medical Boards (FSMB) hold clinicians accountable for AI-related errors, emphasizing the importance of professional judgment. Detailed documentation of AI use and of the clinical reasoning behind each decision is therefore essential for demonstrating accountability.

Cybersecurity and Resilient Infrastructure

Healthcare data is a primary target for cyberattacks, and AI systems introduce new vulnerabilities. This threat undermines the stability of healthcare systems and the goal of building resilient infrastructure under SDG 9. The Consolidated Appropriations Act of 2023 now requires cybersecurity information in premarket submissions for connected medical devices. Comprehensive security programs that address technical and human factors are critical.

The Role of Human Oversight

Regulatory schemes consistently place ultimate responsibility on the healthcare providers and organizations implementing AI. Meaningful human involvement in the decision-making process is a core principle. AI systems must be designed to augment, not replace, clinical judgment, with clear mechanisms for providers to override algorithmic recommendations. This human-in-the-loop approach is fundamental to ensuring technology serves human well-being.
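The override mechanism described above, together with the documentation of AI use and clinical reasoning discussed under liability, can be sketched as a simple record in which the clinician's decision, not the algorithm's output, is final, and any override must carry a written rationale. This is a minimal illustration under stated assumptions: the class and function names (`AIRecommendation`, `ClinicalDecision`, `record_decision`) and the generic "action A/B" labels are hypothetical, and a real system would live inside an EHR and follow organizational policy.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Optional


@dataclass(frozen=True)
class AIRecommendation:
    """Hypothetical output of a clinical decision-support model."""
    model_version: str
    suggestion: str


@dataclass(frozen=True)
class ClinicalDecision:
    """The clinician's final decision, stored alongside the AI output."""
    recommendation: AIRecommendation
    clinician_id: str
    final_action: str
    overridden: bool
    rationale: Optional[str]
    recorded_at: datetime


def record_decision(
    rec: AIRecommendation,
    clinician_id: str,
    final_action: str,
    rationale: Optional[str] = None,
) -> ClinicalDecision:
    """Record a human decision; overriding the AI requires a written rationale."""
    overridden = final_action != rec.suggestion
    if overridden and not (rationale and rationale.strip()):
        raise ValueError("An override must document the clinical reasoning.")
    return ClinicalDecision(
        recommendation=rec,
        clinician_id=clinician_id,
        final_action=final_action,
        overridden=overridden,
        rationale=rationale,
        recorded_at=datetime.now(timezone.utc),
    )


rec = AIRecommendation(model_version="v2.1", suggestion="action A")
accepted = record_decision(rec, "clinician-001", "action A")
override = record_decision(
    rec, "clinician-001", "action B",
    rationale="Findings documented in the chart favor action B.",
)
print(accepted.overridden, override.overridden)  # False True
```

Making the rationale mandatory only on override keeps routine acceptances lightweight while ensuring that every departure from the algorithm leaves a documented trail of professional judgment.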

Conclusion: A Balanced Path Towards Sustainable Healthcare Innovation

The trajectory of AI in U.S. healthcare offers immense potential to advance the Sustainable Development Goals by improving diagnostics, enhancing efficiency, and expanding access to care. However, realizing this potential requires navigating a complex landscape of regulatory, ethical, and technical challenges. Achieving a future where AI promotes equitable and sustainable health outcomes demands a collaborative approach that prioritizes patient safety, health equity, and institutional integrity. Organizations that successfully balance innovation with responsibility will lead the transformation of American healthcare delivery in alignment with global sustainability targets.

Analysis of Sustainable Development Goals in the Article

1. Which SDGs are addressed or connected to the issues highlighted in the article?

SDG 3: Good Health and Well-being

- The article’s central theme is the application of AI in healthcare to improve diagnostic accuracy, enhance clinical efficiency, and expand access to care, which directly aligns with ensuring healthy lives and promoting well-being. It also addresses significant challenges to this goal, such as algorithmic bias that can lead to health disparities.

SDG 9: Industry, Innovation, and Infrastructure

- The text extensively discusses technological innovation, research, and development in the healthcare sector. It highlights the rapid proliferation of AI-enabled medical devices, venture capital investment in AI startups, and the role of major technology corporations in building the infrastructure for AI in healthcare.

SDG 10: Reduced Inequalities

- A major section of the article is dedicated to “Algorithmic Bias and Health Equity Concerns.” It details how AI systems trained on nonrepresentative datasets can perpetuate or worsen existing healthcare disparities among vulnerable populations, including racial and ethnic minorities, the elderly, and individuals with disabilities, directly connecting to the goal of reducing inequality.

SDG 16: Peace, Justice, and Strong Institutions

- The article explores the challenges faced by regulatory bodies like the FDA in creating effective, accountable, and transparent institutions to govern AI in healthcare. It discusses the development of new regulatory frameworks, guidance documents, and the legal complexities surrounding liability, all of which are central to building strong institutions.

2. What specific targets under those SDGs can be identified based on the article’s content?

Under SDG 3: Good Health and Well-being

- Target 3.8: Achieve universal health coverage, including financial risk protection, access to quality essential health-care services… for all. The article discusses how AI has the potential to “expand access to specialized care” and “improve diagnostic accuracy,” which are key components of quality healthcare services. However, it also warns that algorithmic bias could undermine the “for all” aspect of this target by creating disparities in care.

Under SDG 9: Industry, Innovation, and Infrastructure

- Target 9.5: Enhance scientific research, upgrade the technological capabilities of industrial sectors… encouraging innovation. The article directly reflects this target by describing the “unprecedented pace” of AI evolution in healthcare, the authorization of nearly 800 AI-enabled medical devices, and robust venture capital investment aimed at developing innovative solutions.

Under SDG 10: Reduced Inequalities

- Target 10.3: Ensure equal opportunity and reduce inequalities of outcome, including by eliminating discriminatory… policies and practices. The article addresses this target by highlighting the ACA Section 1557 final rule, which requires healthcare entities to ensure AI systems do not discriminate. The discussion on the need for bias testing and mitigation strategies is a direct effort to prevent discriminatory outcomes in healthcare.

Under SDG 16: Peace, Justice, and Strong Institutions

- Target 16.6: Develop effective, accountable and transparent institutions at all levels. The article details the FDA’s efforts to adapt its regulatory frameworks for AI, such as publishing “comprehensive draft guidance” and developing “predetermined change control plans (PCCPs).” The emphasis on “explainable AI” and transparency to allow clinicians to understand algorithmic recommendations also supports this target.

3. Are there any indicators mentioned or implied in the article that can be used to measure progress towards the identified targets?

For SDG 9 (Target 9.5 – Innovation)

- Indicator: Number of AI- and ML-enabled medical devices authorized for marketing. The article explicitly cites “nearly 800 AI- and machine learning (ML)-enabled medical devices authorized for marketing by the US Food and Drug Administration (FDA) in the five-year period ending September 2024.” This provides a direct, quantifiable measure of innovation in the sector.

- Indicator: Amount of venture capital investment in healthcare AI. The article notes that “venture capital investment in healthcare AI remains robust, with billions of dollars allocated to startups,” which can be tracked as a measure of financial commitment to innovation.

For SDG 10 (Target 10.3 – Reduced Inequalities)

- Indicator: Percentage of approved AI medical devices reporting demographic data in clinical studies. The article provides specific data from a 2024 review: “only 3.6% of approved devices reporting race and ethnicity data, 99.1% providing no socioeconomic information, and 81.6% failing to report study subject ages.” Tracking these percentages over time would measure progress in ensuring training datasets are representative.

For SDG 16 (Target 16.6 – Strong Institutions)

- Indicator: Number of states introducing AI-related legislation. The article cites “45 states introducing AI-related legislation during the 2024 session,” a figure that serves as an indicator of the institutional and legislative response to the challenges of AI.

- Indicator: Development and publication of regulatory guidance. The article refers to the FDA’s “January publication of comprehensive draft guidance,” which is a tangible output demonstrating institutional efforts to create clear and accountable regulatory pathways.

4. Table of SDGs, Targets, and Indicators

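| SDGs | Targets | Indicators |
|---|---|---|
| SDG 3: Good Health and Well-being | 3.8: Achieve universal health coverage and access to quality essential health-care services for all. | (Implied) Measures of diagnostic accuracy, clinical efficiency, and equitable access to specialized care across different demographic groups. |
| SDG 9: Industry, Innovation, and Infrastructure | 9.5: Enhance scientific research, upgrade technological capabilities, and encourage innovation. | Number of AI- and ML-enabled medical devices authorized for marketing by the FDA; amount of venture capital investment in healthcare AI. |
| SDG 10: Reduced Inequalities | 10.3: Ensure equal opportunity and reduce inequalities of outcome by eliminating discriminatory practices. | Percentage of approved AI medical devices reporting demographic data (race and ethnicity, socioeconomic status, age) in clinical studies. |
| SDG 16: Peace, Justice, and Strong Institutions | 16.6: Develop effective, accountable and transparent institutions at all levels. | Number of states introducing AI-related legislation; development and publication of regulatory guidance (e.g., FDA draft guidance). |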

Source: natlawreview.com
